Introduction

Nowadays, in the 3D-programming world, everybody is talking about multi-texturing and all the cool things you can use it for.

But there are many different multi-texturing techniques that already require all the texture processing power in an accelerator, or even more.

And besides, traditionally, the main reason for using a texture in 3D rendering is that you can add a lot of detail in one fell swoop.

Fog, on the other hand, is usually a very low-resolution effect. Its main use so far is what's called "distance-fog",

which is the use of fog to smoothly eliminate excess geometry that's a certain distance from the point of view,

and to prevent popping as new geometry gets close enough to become visible.
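As a rough illustration of distance fog, here is a minimal Python sketch of a linear fog ramp. The function name `distance_fog` and the `fog_start` and `fog_end` parameters are assumptions made for this example, and so is the linear falloff; the text does not prescribe a particular curve. The result follows the convention used for the fog value in the formulas of this text: one means not covered by the fog, zero means fully covered.

```python
def distance_fog(distance, fog_start, fog_end):
    """Return a fog factor for a point at `distance` from the point of view.

    1.0 means not covered by the fog, 0.0 means fully covered.
    `fog_start` and `fog_end` are hypothetical tuning parameters: geometry
    closer than `fog_start` is clear, geometry beyond `fog_end` is fully
    fogged and can be dropped without visible popping.
    """
    if fog_end <= fog_start:
        raise ValueError("fog_end must be greater than fog_start")
    # Linear ramp from 1 at fog_start down to 0 at fog_end (an assumption).
    t = (fog_end - distance) / (fog_end - fog_start)
    # Clamp so points outside the ramp stay fully clear or fully fogged.
    return max(0.0, min(1.0, t))
```

With `fog_start = 10` and `fog_end = 50`, a point at distance 30 sits halfway through the ramp and gets a fog factor of 0.5.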

But fog can also be used to enhance the atmosphere and realism of a game or 3D scene.

The techniques used to do that are usually called "volumetric fog techniques".

This class focuses on implementing different volumetric fog techniques without using those precious texture units.

Instead, the fog is computed per vertex and interpolated, on the premise that the geometry will be "dense enough" to avoid any major visible artifacts.

"Dense enough" will have to be defined and adjusted by the engineer using these techniques.

Getting more colors out of your fog

Using vertex-interpolated volumetric fog effectively requires a feature that is missing from today's consumer-level graphics hardware:

the ability to specify a fog color that changes throughout a mesh. To illustrate this,

imagine an underwater scene where you use volumetric fog to darken the entrance into a cave.

The normal distance fog should be blue or green for the water, while the volumetric fog for the cave should be black.

Now imagine, within that scene, a long eel emerging from the cave. The far end of the tail should be completely fogged in black,

while the head should be fogged normally with the blue-green underwater distance fog.

The method I propose to overcome this limitation is a little abuse of the specular and diffuse components present in all of today's

graphics hardware. So, here's how those components are commonly used:

Fragment = texture * diffuse + specular

where Fragment represents the resulting color for a rendered pixel, texture is the surface's color normally read from a texture,

diffuse is the interpolated diffuse color and specular is the interpolated specular color.

All colors range from zero (black) to one (white), and the formulas are applied to all the components (red, green and blue) independently.

Now, what we really want is something like:

Fragment = texture * diffuse * fog + specular * fog + fogColor * (1 - fog)

where fog is the interpolated fog intensity, and fogColor is the interpolated fog color. Values for fog range from zero

(fully covered by the fog) to one (not covered by the fog). The trick consists of regrouping the terms in this manner:

Fragment = texture * (diffuse * fog) + (specular * fog + fogColor * (1 - fog))

So, if we make:

newDiffuse = diffuse * fog

newSpecular = specular * fog + fogColor * (1 - fog)

we once again have:

Fragment = texture * newDiffuse + newSpecular

which is the original formula supported by all current graphics hardware.
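The substitution above can be sketched per vertex in a few lines of Python. This is only an illustration under stated assumptions: the names `fold_fog` and `shade` are made up for this example, colors are plain RGB tuples in the zero-to-one range, and `shade` evaluates the fixed formula in software as a stand-in for what the hardware does.

```python
def fold_fog(diffuse, specular, fog, fog_color):
    """Fold a per-vertex fog value and fog color into diffuse and specular.

    `fog` is 1.0 for "not covered" and 0.0 for "fully covered".
    Returns (new_diffuse, new_specular) as RGB tuples.
    """
    # newDiffuse = diffuse * fog
    new_diffuse = tuple(d * fog for d in diffuse)
    # newSpecular = specular * fog + fogColor * (1 - fog)
    new_specular = tuple(
        s * fog + c * (1.0 - fog) for s, c in zip(specular, fog_color)
    )
    return new_diffuse, new_specular


def shade(texture, diffuse, specular):
    """Software stand-in for the fixed formula: texture * diffuse + specular."""
    return tuple(t * d + s for t, d, s in zip(texture, diffuse, specular))


# Hypothetical vertex: white diffuse, no specular, half covered by a
# blue-green fog.
texture = (1.0, 0.5, 0.0)
new_diffuse, new_specular = fold_fog(
    diffuse=(1.0, 1.0, 1.0),
    specular=(0.0, 0.0, 0.0),
    fog=0.5,
    fog_color=(0.0, 0.5, 1.0),
)
fragment = shade(texture, new_diffuse, new_specular)
```

In the eel example, a tail vertex would use `fog = 0.0` with a black `fog_color`, which drives both outputs to black, while a head vertex with `fog = 1.0` passes its diffuse and specular colors through unchanged.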

There is only one problem with this method: the hardware will interpolate the newDiffuse and newSpecular values linearly,

which means that none of the original values that we wanted interpolated (diffuse, specular, fog and fogColor) will actually interpolate linearly.

This gives the pixels inside each polygon different colors than independent interpolators would have produced. The visual results are not bad,

though, and the artifacts can be lessened by careful use of finer meshes.
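A small numeric sketch can make the discrepancy concrete. It compares, for a single color channel along one edge, the value obtained by interpolating the premultiplied newDiffuse against the value that separate diffuse and fog interpolators would give. The function names and the sample vertex values are assumptions chosen for illustration only.

```python
def lerp(a, b, t):
    """Linear interpolation between a (t = 0) and b (t = 1)."""
    return a + (b - a) * t


def hardware_diffuse(diffuse_a, fog_a, diffuse_b, fog_b, t):
    """Interpolate the premultiplied values, as the hardware ends up doing."""
    return lerp(diffuse_a * fog_a, diffuse_b * fog_b, t)


def independent_diffuse(diffuse_a, fog_a, diffuse_b, fog_b, t):
    """Interpolate diffuse and fog separately, then multiply."""
    return lerp(diffuse_a, diffuse_b, t) * lerp(fog_a, fog_b, t)


# Hypothetical edge: vertex A is bright and clear, vertex B is dimmer
# and fully fogged.
a = (1.0, 1.0)  # (diffuse, fog) at vertex A
b = (0.5, 0.0)  # (diffuse, fog) at vertex B

mid_hw = hardware_diffuse(*a, *b, 0.5)      # 0.5
mid_ind = independent_diffuse(*a, *b, 0.5)  # 0.375
```

Both versions agree at the vertices themselves and differ only in between, which is why a finer mesh, with more vertices carrying exact values, keeps the difference small.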

This technique is not only useful for rendering volumetric fog. It can also be used to render special effects without requiring additional geometry.

Among others, you can have fog gradients (very useful in underwater environments and sunsets) and directional fog color cues,

like nuclear explosions in the far distance.

Implementation

Implementation details will be discussed in the lecture during the GDC.

Demos will also be shown, demonstrating the different features and problems that this technique presents,

along with a short study on how some of this could be implemented using DirectX 8 vertex shaders.

Also, alternative math formulas and adjustments will be discussed, with the aim of improving the visual quality and reducing the impact of visual artifacts.

All demos, slides and additional material will be made available for download after the conference.

Conclusion

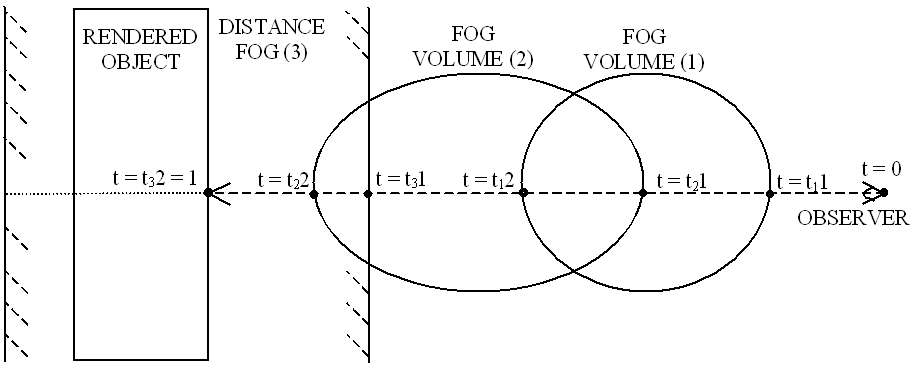

The volumetric fog technique presented is an interesting application of the concepts of ray casting, renderer interpolators and 3D geometry math,

and the range of effects that can be achieved is very encouraging.

Nevertheless, the technique isn’t free of problems. The most important, visually, is that small fog volumes can reveal the polygon mesh layout along their edges.

In order to avoid this, the fog volumes must be considerably larger than the polygons rendered. Also, computationally,

this technique puts a heavy strain on the vertex processing pipeline. This can make it unsuitable for some purposes.

In any case, the technique is proven (it was used in the Ripcord Games title "Armor Command") and definitely worth studying in detail.

All trademarked things I mention here are TM by their respective owners. If you are one of those owners and want to be specifically mentioned, please, contact me and

I'll include it.

To contact JCAB: jcab@JCABs-Rumblings.com

Last updated: Wednesday, 14-Nov-2001 23:37:48 PST